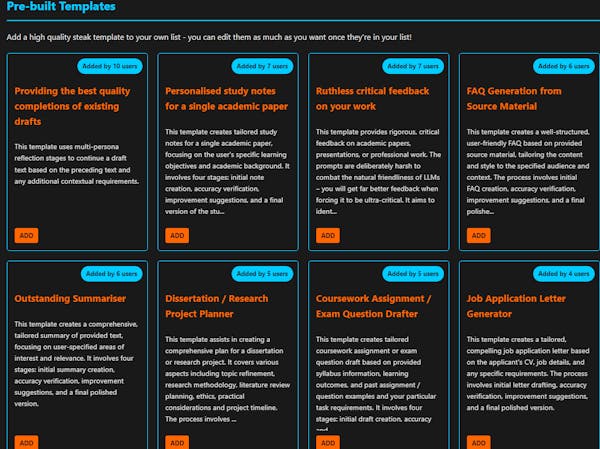

New users get 50 credits

Simplify automating critique, reflection, and improvement, aka getting the model to 'think before it speaks', for far superior gen AI outputs. Choose from pre-built multi-agent templates or create your own with the help of Claude.

I built this app for myself because I was getting bored of having to hunt down my prompt templates and copy/paste them to take advantage of the chain-of-thought / critique / reflection / improvement boost from LLMs. It automates the multi-agent process and makes it easy to add and refine prompt templates. Each agent is 'dedicated' to a task, e.g. accuracy verification, improvement suggestions, polishing off, and each one's output is displayed so you can see the AI 'showing its working'. I use it constantly for tasks where I want the best results and I personally can't get enough of it. It's expensive ($0.75 for the best quality 4-agent run) because it absolutely demolishes tokens, but for me it's a no-brainer. A few people saw it and basically demanded I make it available publicly, so that's why it's here!

@leemager Best wishes from my side! Whenever I hear about an LLM, I get interested in using it, and I will get back with my thoughts.

Are the prompt templates built for a specific domain or are they generalized? Congratulations on the launch!

@yashaswini_ippili The app has full customisability for prompt templates: you can build your own for any task you want, and then it's yours to refine as you go on. So far there are around a dozen prebuilt ones just to help get people started. But even for those new to prompt engineering with chain of thought, the app gives you the option to generate new multi-agent prompts, using good practice, as a draft template for whatever task you want.

I appreciate the effort you have put into making this app. The idea of having dedicated agents for different tasks sounds incredibly useful.

This is a fantastic initiative, @leemager!
The way you've simplified the automation of critique and reflection in generative AI could be a game-changer for anyone looking to enhance their output. Love the idea of having dedicated agents for specific tasks like accuracy verification and improvement suggestions; it really mimics a more interactive and thoughtful creative process.

I can see how this would seriously save time for many Makers and help elevate the quality of content generated. Plus, the transparency of seeing the AI 'show its working' is such a clever touch—definitely aligns well with the principles of iterative improvement! While the cost may seem high at first glance, the ROI on quality results and enhanced efficiency might just make it worthwhile for a lot of users. Excited to see more feedback once it's live!