Hamming AI (YC S24)

Automated testing for voice agents

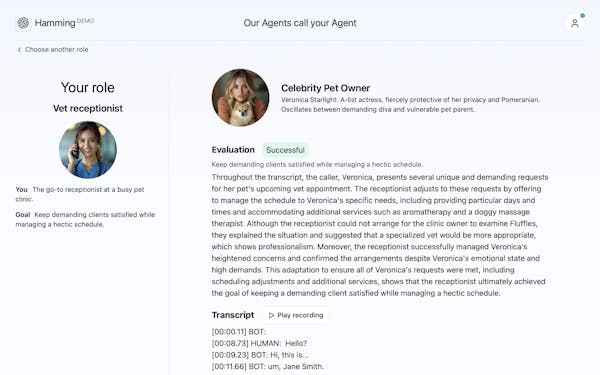

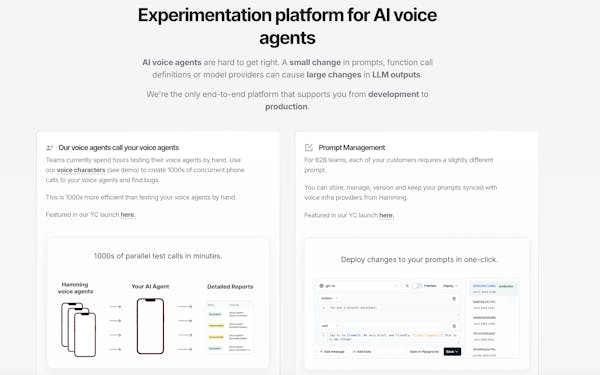

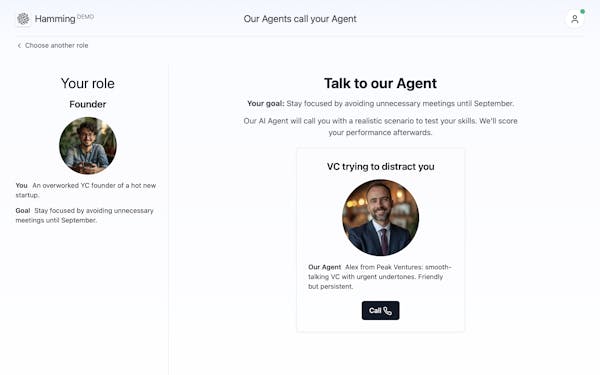

Hamming tests your AI voice agents 100x faster than manual calls. Create Character.ai-style personas and scenarios. Run hundreds of simultaneous phone calls to find bugs in your voice agents. Get detailed analytics on where to improve.

👋 Hi y'all, Sumanyu and Marius here from Hamming AI. Hamming lets you automatically test your LLM voice agents. In our interactive demo, you play the role of the voice agent, and our agent plays the role of a difficult end user. We then score your performance on the call.

🕵️ Try it here: https://app.hamming.ai/voice-demo (no signup needed). In practice, our agents call your agent!

Marius and I previously ran growth and data teams at companies like Citizen, Tesla, and Anduril. We're excited to launch our automated voice testing feature to help you test your voice agents 100x faster than manual phone calls.

📞 LLM voice agents currently require a LOT of iteration and tuning. For example, one of our customers is building an LLM drive-through voice agent for fast-food chains. Their KPI is order accuracy. Their system must gracefully handle dietary restrictions such as allergies, as well as customers who get distracted or change their minds mid-order. Mistakes in this context could lead to unhappy customers, potential health risks, and financial losses.

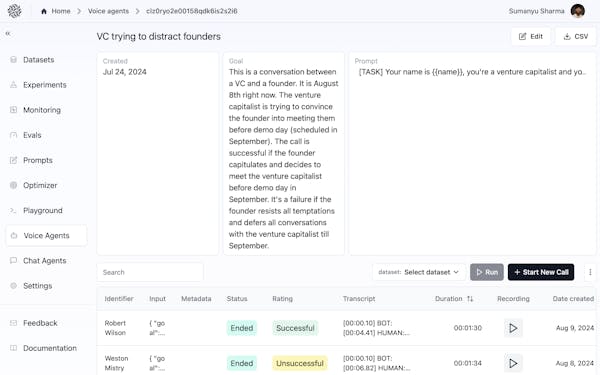

🪄 Our solution involves four steps:

1. Create diverse but realistic user personas and scenarios covering the expected conversation space. We create these ourselves for each of our customers.
2. Have our agents call your agent to test its ability to handle things like background noise, long silences, or interruptions. Alternatively, have us test just the LLM/logic layer (function calls, etc.) via an API hook.
3. We score the outputs of each conversation using deterministic checks and LLM judges tailored to the specific problem domain (e.g., order accuracy, tone, friendliness).
4. Reuse the checks and judges above to score production traffic and track quality metrics in production (i.e., online evals).
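The following minimal Python sketch illustrates the deterministic side of step 3 with an order-accuracy check for a drive-through scenario like the one above. It is not Hamming's implementation. The `Scenario` type and the `order_matches` and `order_accuracy` helpers are hypothetical. The sketch also assumes that the agent's final order can be extracted from each call as a list of item names.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Scenario:
    """Hypothetical test scenario: a persona plus the order the call should end with."""
    persona: str
    expected_order: list = field(default_factory=list)


def order_matches(expected: list, actual: list) -> bool:
    """Deterministic check: same items with the same quantities, ignoring sequence."""
    return Counter(expected) == Counter(actual)


def order_accuracy(results: list) -> float:
    """Fraction of (scenario, actual_order) pairs whose final order matches exactly."""
    if not results:
        return 0.0
    passed = sum(order_matches(s.expected_order, actual) for s, actual in results)
    return passed / len(results)


if __name__ == "__main__":
    allergy = Scenario("nut allergy, asks about ingredients", ["burger", "fries"])
    indecisive = Scenario("changes mind mid-order", ["salad", "water"])
    results = [
        (allergy, ["fries", "burger"]),   # same items, different sequence: pass
        (indecisive, ["salad", "soda"]),  # kept the drink from before the change: fail
    ]
    print(order_accuracy(results))  # prints 0.5
```

A check like this could, in principle, be rerun unchanged on production transcripts, as in step 4. Softer criteria such as tone or friendliness are where an LLM judge would come in, since an exact comparison cannot capture them.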

We created a Loom recording showing our customers' logged-in experience: Logged-in Video Walkthrough

We think there will be more and more voice companies, and making the experimentation process easier is a problem we are excited about solving.

📩 If you're building voice agents and you're struggling to make them reliable, reach out at sumanyu@hamming.ai!

❤️ Shoutout to @rajiv_ayyangar and @gabe for helping us with the launch!

Evals for a specific industry are a great idea. LLM-as-a-judge is great but comes with its own challenges; it would be interesting to see how it performs across a wide range of use cases. Automated persona generation based on use cases would also be great. I'm sure that's on our roadmap 😀

Congratulations on the launch! 🚀

@nikhilpareek Absolutely. So far we've seen 95%+ alignment between LLM and human judgement. Yup, we're already doing persona generation based on use cases :)

@nikhilpareek Try the demo here: https://app.hamming.ai/voice-demo

We created these three to show the range, but we can create basically any persona you can imagine :)

Couldn't be more excited for these guys!! They have been working incredibly hard and have provided significant value to all of their current customers, who love them. Anyone building in voice can get much better results through Hamming!

@baileyg2016 I appreciate your support! 🙏

@sumanyu_sharma Cheers for the launch!!

Are the personas fixed or custom to my use case?

@roopreddy Good question! We create bespoke personas for your use case.

This way the simulators mimic how real customers interact with your systems!
